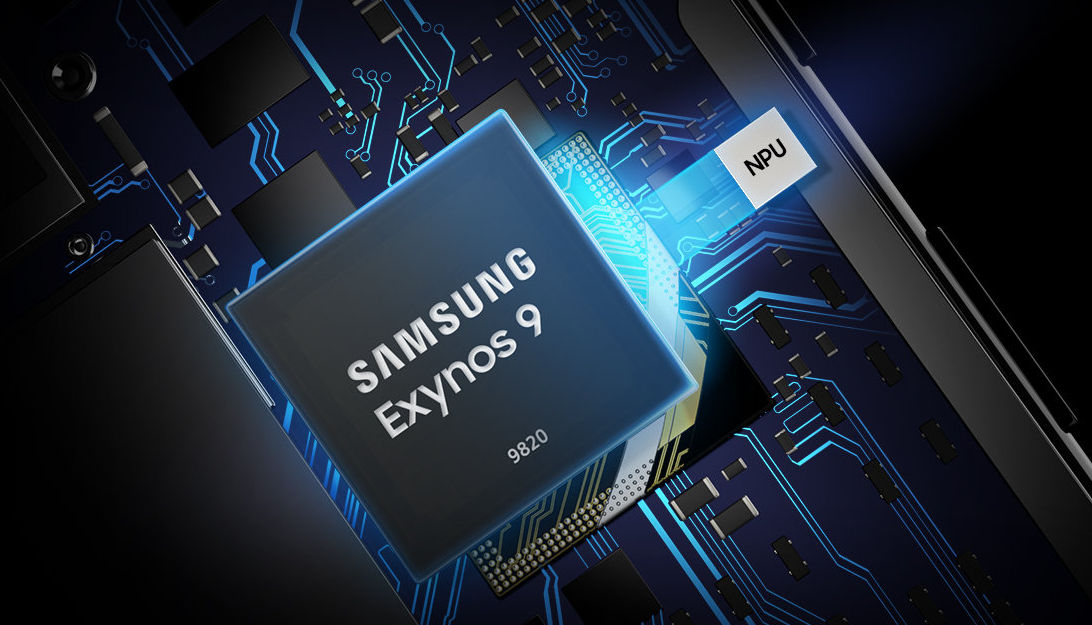

Last month, Samsung expanded development of its proprietary NPU (neural processing unit) technology. At the Conference on Computer Vision and Pattern Recognition (CVPR), the company introduced its On-Device AI lightweight algorithm, and today Samsung has announced an update: it says it has successfully developed On-Device AI lightweight technology that performs computations 8 times faster than existing 32-bit deep learning computation on servers. A core feature of On-Device AI technology is its ability to process large amounts of data at high speed without consuming excessive amounts of electricity.

So how does On-Device AI lightweight technology work? Through learning, the technology determines the intervals of the significant data that most influence overall deep learning performance. The Samsung Advanced Institute of Technology (SAIT) reportedly ran experiments showing that quantizing a 32-bit server deep learning algorithm this way to fewer than 4 bits delivered higher accuracy than other existing solutions. According to Samsung, computation using this Quantization Interval Learning (QIL) process can achieve the same results as existing processes while using 1/40 to 1/120 as many transistors when the data of a deep learning computation is represented in bit groups lower than 4 ...
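
To make the idea of a quantization interval concrete, here is a minimal Python/NumPy sketch. It is not Samsung's implementation: the function names `quantize_interval`, `dequantize_interval` and `fit_interval` are hypothetical, the mapping from weights to levels is a simplified linear one, and the interval is picked by a grid search on reconstruction error. That grid search is a stand-in; QIL itself learns the interval as part of training. Weight magnitudes below the interval are pruned to zero, magnitudes above it are clipped to the top level, and magnitudes inside it are spread uniformly across the available levels.

```python
import numpy as np


def quantize_interval(w, center, distance, bits):
    """Map weights to signed integer codes using the interval
    [center - distance, center + distance] on |w| (hypothetical helper).

    |w| below the interval is pruned to code 0, |w| above it is clipped
    to the top level, and magnitudes inside it are spaced uniformly.
    """
    lower, upper = center - distance, center + distance
    levels = 2 ** (bits - 1) - 1  # magnitude levels; one bit carries the sign
    mag = np.abs(w)
    t = np.clip((mag - lower) / (upper - lower), 0.0, 1.0)  # normalize into [0, 1]
    codes = np.sign(w) * np.round(t * levels)
    return codes.astype(np.int8), levels


def dequantize_interval(codes, center, distance, levels):
    """Approximate inverse of quantize_interval; code 0 maps back to 0."""
    lower, upper = center - distance, center + distance
    mag = lower + np.abs(codes) / levels * (upper - lower)
    return np.where(codes == 0, 0.0, np.sign(codes) * mag)


def fit_interval(w, bits, grid=40):
    """Choose (center, distance) by grid search on reconstruction MSE.

    Assumption: a simple stand-in for QIL's learning step, which trains
    the interval parameters together with the network.
    Returns (mse, center, distance).
    """
    best = None
    max_mag = float(np.max(np.abs(w)))
    for upper in np.linspace(max_mag / grid, max_mag, grid):
        for lower in np.linspace(0.0, upper, grid, endpoint=False):
            center, distance = (upper + lower) / 2, (upper - lower) / 2
            codes, levels = quantize_interval(w, center, distance, bits)
            approx = dequantize_interval(codes, center, distance, levels)
            err = float(np.mean((w - approx) ** 2))
            if best is None or err < best[0]:
                best = (err, center, distance)
    return best


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    weights = rng.normal(0.0, 0.05, size=1000).astype(np.float32)
    err, c, d = fit_interval(weights, bits=4)
    codes, levels = quantize_interval(weights, c, d, bits=4)
    print(f"interval=[{c - d:.4f}, {c + d:.4f}] codes in [{codes.min()}, {codes.max()}] MSE={err:.2e}")
```

With `bits=4`, every code falls in the range -7 to 7, so each weight fits in 4 bits instead of 32, one eighth of the storage of a 32-bit float. Choosing where the interval sits, rather than always quantizing the full range of values, is what lets the few available levels be spent on the weights that matter most.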

We welcome comments that add value to the discussion. We attempt to block comments that use offensive language or appear to be spam, and our editors frequently review the comments to ensure they are appropriate. As the comments are written and submitted by visitors of The Sheen Blog, they in no way represent the opinion of The Sheen Blog. Let's work together to keep the conversation civil.