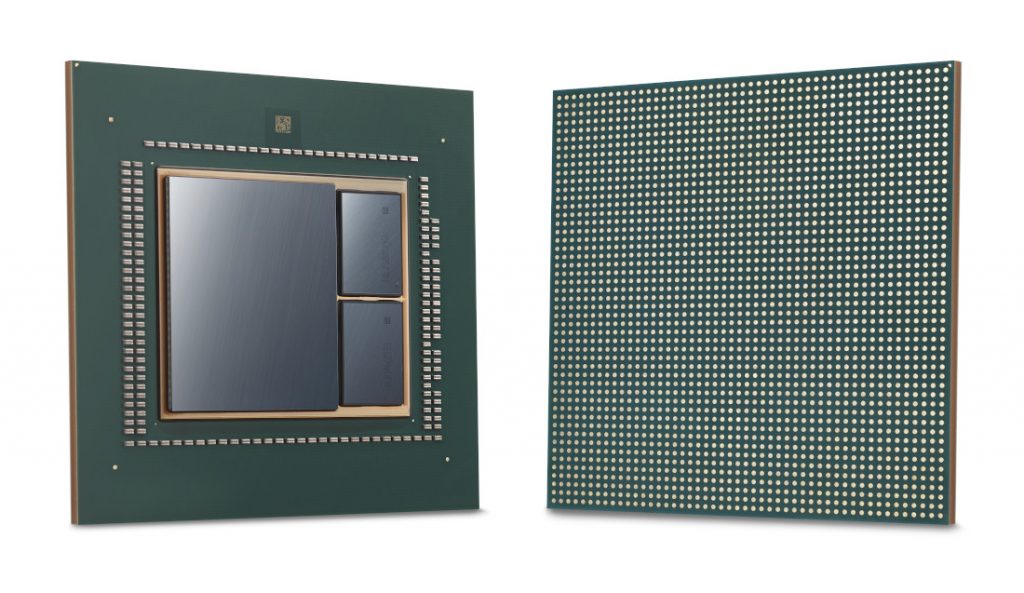

Baidu, a Chinese-language internet search provider, has partnered with Samsung Electronics to produce its first cloud-to-edge AI accelerator chip, Baidu KUNLUN. In what is one of its first foundry collaborations, the chip is built on XPU, Baidu's home-grown neural processor architecture, using Samsung's 14nm process technology. It is designed to power large-scale AI workloads such as search ranking, speech recognition, image processing, natural language processing, autonomous driving, and deep learning platforms. With Samsung's I-Cube (Interposer-Cube) package solution, the chip supports up to 512 GB/s of memory bandwidth and delivers up to 260 tera operations per second (TOPS) at 150 watts. In testing, the chip ran a natural language processing model three times faster than a conventional GPU/FPGA-accelerated setup.

Commenting on the launch of the chip, OuYang Jian, Distinguished Architect of Baidu, said: "We are excited to lead the HPC industry together with Samsung Foundry. Baidu KUNLUN is a very challenging project since it requires not only a high level of reliability and performance at the same time, but is also a compilation of the most advanced technologies in the semiconductor industry. Thanks to ..."

Read Here»
